Testing your iOS apps at a low cost using LLMs

We have just built agentic QA at Tuist, Tuist QA, and started early testing with users. Ever since LLM-based solutions started popping up, the team has been looking with curiosity at the technology and at the products built on it. We kept thinking about how we could leverage it to solve painful problems in the ecosystem and bring real value to the iOS community. At the same time, we wanted a solution that would build incrementally on past work (lower investment) while taking advantage of our expertise and foundations in the space in which we operate: Apple native development.

While devoting mental energy to LLMs and the needs of Apple's ecosystem, we came across acceptance tests. If you've worked with them in your Xcode projects, you might have experienced the pain. Developing them is costly, although Apple is helping a bit in Xcode 26 with tools that automate writing them. Still, writing is only part of it. Acceptance tests are a runtime contract with your application's interface: find a button and tap it, or scroll until you find something and then act on it. One day something changes and the contract is no longer met, which doesn't necessarily mean the app has stopped doing its job. The test just needs to be updated, and since this isn't something you do every day, the cost of refreshing your memory on how to fix it is quite high.

But that's not all. Acceptance tests take time to run, so you'd want to run them in parallel. However, each parallel run needs its own simulator, and there's a limit to how many simulators you can run per macOS environment. Going beyond that limit means sharding, and sharding brings automation complexity you don't want to deal with. So you likely end up running the tests sequentially, adding a lot of minutes to every PR. Once the run time gets too long, you move them to run on main, distancing failures in time and space from the PRs that might have introduced legitimate failures. The value of those tests diminishes significantly until the team just removes them.

The alternative? Have an in-house or external QA team. But this is equally costly and doesn't scale well: each tester can only work through one session at a time. Moreover, testers will likely lack a lot of the context needed to test the app effectively, unless they are in-house or have been working with you long enough to know which areas are critical to the business and can focus on keeping those from breaking. Say they find issues and report them. Reports usually come as a description of what they expected, what they got instead, and perhaps some screenshots or videos of the failure. The developer receives the report and is left wondering where to start debugging. The video or screenshot should be enough, but it's not. You wish you had logs, handled errors, or network requests, but all of that information is gone. Neither you nor the QA person can reproduce the issue, and after endless back-and-forth over Slack or email, you give up. The app ships, and your error-tracking platform reveals the scenario that led to the error. You'd have caught it with the right debugging information, but the tester had no easy way to provide it.

These tests are insanely valuable, yet extremely costly. What if LLMs could make them less costly? That was the hypothesis we started with, and it turns out they can. We integrated Tuist with Namespace to spin up macOS environments where we download previews of your apps and run LLM-driven testing sessions in simulators. Along the way, we collect diagnostic data such as logs, so that on completion we can provide you not only with the results but also with everything you need to diagnose any issue. The results are quite promising, and it's truly mind-blowing to watch. Imagine incorporating not only the PR context into the testing session but also information about the business, so that the system can identify the most critical workflows. We'll have to iterate based on real scenarios, because... LLMs, but we hope to reach a point where we strike an amazing balance: insane value at little cost. I don't remember the last time I was this excited about a piece of work.

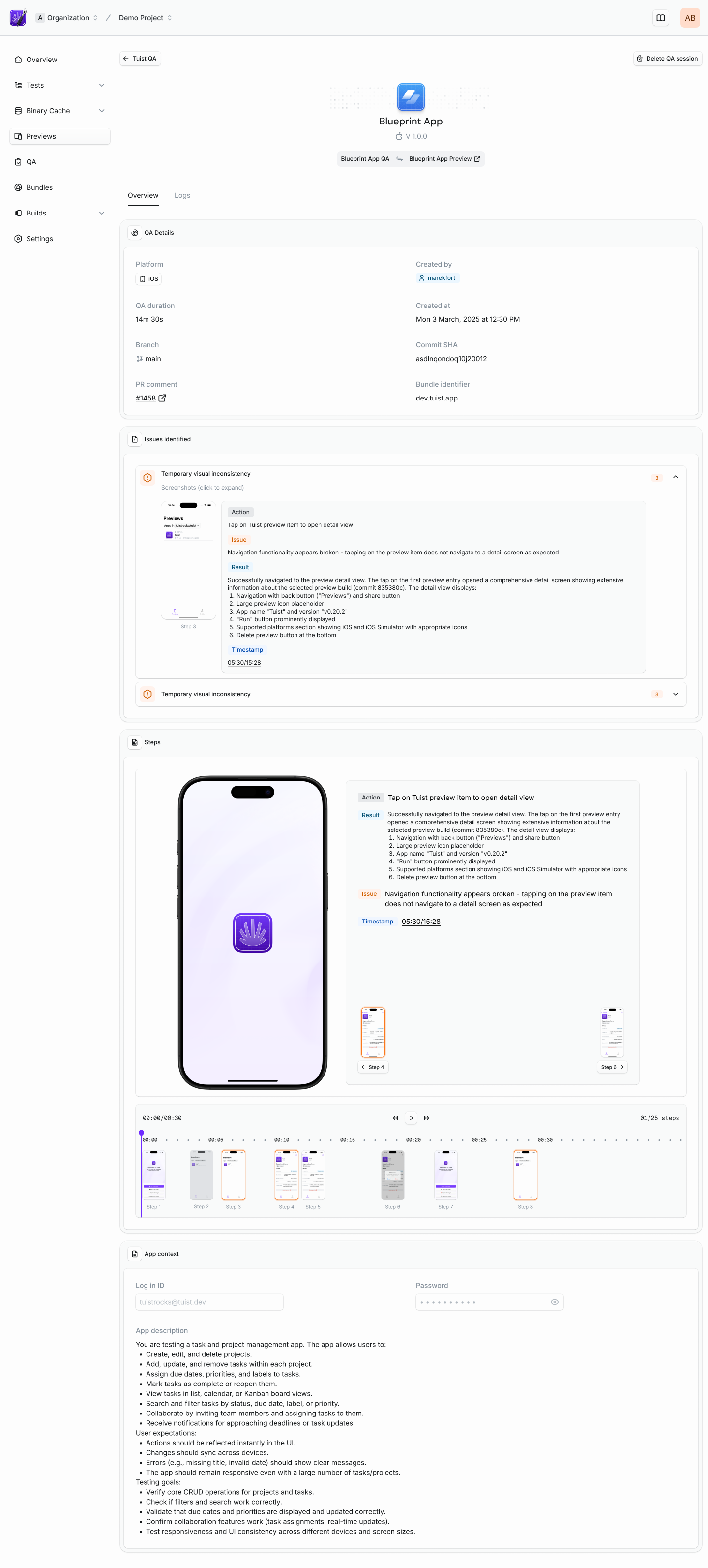

If you're interested, head over to tuist.dev/qa and we'll add you to the beta testing list. The image above is a sneak peek of the report your team will get.

All you need to opt into Tuist QA is an iOS app project, and React Native apps work too.

You can also reach out to me personally at pedro@tuist.dev to learn more about the feature.